At ValidExamDumps, we consistently monitor the updates that Snowflake makes to the ARA-R01 exam questions. Whenever our team identifies changes in the exam questions, exam objectives, exam focus areas, or exam requirements, we immediately update our exam questions for both the PDF and online practice exams. This commitment ensures that our customers always have access to the most current and accurate questions. By preparing with these actual questions, our customers can pass the Snowflake SnowPro Advanced: Architect Recertification exam on their first attempt without needing additional materials or study guides.

Other certification material providers often include outdated questions, or questions that Snowflake has removed, in their Snowflake ARA-R01 materials. These outdated questions lead to customers failing their Snowflake SnowPro Advanced: Architect Recertification exam. In contrast, we ensure our question bank includes only precise and up-to-date questions, guaranteeing their presence in your actual exam. Our main priority is your success in the Snowflake ARA-R01 exam, not profiting from selling obsolete exam questions in PDF or online practice tests.

An Architect is implementing a CI/CD process. When attempting to clone a table from a production to a development environment, the cloning operation fails.

What could be causing this to happen?

Cloning a table with a masking policy can cause the cloning operation to fail because the masking policy is not automatically cloned with the table. The masking policy is a separate schema-level object with its own set of privileges [1].

Reference

[1] Snowflake Documentation on Cloning Considerations.
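Building on the explanation above, a CI/CD pipeline could list the policies attached to a production table before cloning it, so that a missing or inaccessible policy is caught before the clone runs. The following is a minimal sketch under several assumptions. The helper name `clone_with_policy_check` and the table names are hypothetical. The code only builds SQL text and does not connect to Snowflake. The `INFORMATION_SCHEMA.POLICY_REFERENCES` table function and the `CLONE` clause are standard Snowflake SQL, but you should verify the exact column names against the documentation.

```python
from __future__ import annotations


def clone_with_policy_check(source: str, target: str) -> list[str]:
    """Return SQL for a hypothetical CI/CD clone step (sketch, not an official API).

    `source` and `target` are fully qualified names such as
    "PROD_DB.SALES.ORDERS" (placeholder names).
    """
    if source.count(".") != 2 or target.count(".") != 2:
        raise ValueError("expected database.schema.table names")
    source_db = source.split(".")[0]
    return [
        # 1. List policies attached to the source table so the pipeline can
        #    confirm they are accessible from the target environment first.
        "SELECT policy_name, policy_kind, ref_column_name "
        f"FROM TABLE({source_db}.INFORMATION_SCHEMA.POLICY_REFERENCES("
        f"REF_ENTITY_NAME => '{source}', REF_ENTITY_DOMAIN => 'table'))",
        # 2. Zero-copy clone of the table into the target environment.
        f"CREATE OR REPLACE TABLE {target} CLONE {source}",
    ]


if __name__ == "__main__":
    for stmt in clone_with_policy_check("PROD_DB.SALES.ORDERS", "DEV_DB.SALES.ORDERS"):
        print(stmt)
```

In a real pipeline, the first statement's result would be inspected before the second statement is issued.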

A company is designing its serving layer for data that is in cloud storage. Multiple terabytes of the data will be used for reporting. Some data does not have a clear use case but could be useful for experimental analysis. This experimentation data changes frequently and is sometimes wiped out and replaced completely in a few days.

The company wants to centralize access control, provide a single point of connection for the end-users, and maintain data governance.

What solution meets these requirements while MINIMIZING costs, administrative effort, and development overhead?

The most cost-effective and administratively efficient solution is to combine native and external tables. Native tables for the reporting data provide performance and governance. External tables give flexibility for the frequently changing experimental data, which can be replaced in cloud storage without being reloaded into Snowflake. Creating roles with specific grants to each dataset follows the principle of least privilege, centralizes access control, and simplifies user management [1][2].

Reference

* [1] Snowflake Documentation on Optimizing Cost.

* [2] Snowflake Documentation on Controlling Cost.
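The following minimal Python sketch makes the role-per-dataset idea concrete by generating the grant plan as SQL text. The role names, dataset names, and the `Dataset`/`grant_statements` helpers are hypothetical, and the code does not connect to Snowflake. The statements use the usual `CREATE ROLE` and `GRANT ... TO ROLE` forms, but check the exact `GRANT` syntax for external tables against the Snowflake documentation before relying on it.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Dataset:
    name: str       # fully qualified name, e.g. "ANALYTICS.REPORTING.SALES" (placeholder)
    external: bool  # True for an external table over cloud storage


def grant_statements(role_datasets: dict[str, list[Dataset]]) -> list[str]:
    """Build role-based GRANT statements, one role per group of datasets (sketch)."""
    statements: list[str] = []
    for role in sorted(role_datasets):
        statements.append(f"CREATE ROLE IF NOT EXISTS {role}")
        seen: set[str] = set()
        for ds in role_datasets[role]:
            db, schema, _ = ds.name.split(".")
            # A role needs USAGE on the containing database and schema; emit each once.
            for usage in (f"GRANT USAGE ON DATABASE {db} TO ROLE {role}",
                          f"GRANT USAGE ON SCHEMA {db}.{schema} TO ROLE {role}"):
                if usage not in seen:
                    seen.add(usage)
                    statements.append(usage)
            # Read-only access to the dataset itself, native or external.
            kind = "EXTERNAL TABLE" if ds.external else "TABLE"
            statements.append(f"GRANT SELECT ON {kind} {ds.name} TO ROLE {role}")
    return statements


if __name__ == "__main__":
    plan = grant_statements({
        "REPORTING_READER": [Dataset("ANALYTICS.REPORTING.SALES", external=False)],
        "EXPERIMENT_READER": [Dataset("ANALYTICS.LAKE.EVENTS", external=True)],
    })
    print("\n".join(plan))
```

Keeping the reporting and experimentation data behind separate roles means that replacing the experimental files does not require any change to the reporting grants.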

An Architect needs to meet a company requirement to ingest files from the company's AWS storage accounts into the company's Snowflake Google Cloud Platform (GCP) account. How can the ingestion of these files into the company's Snowflake account be initiated? (Select TWO).

To ingest files from the company's AWS storage accounts into the company's Snowflake GCP account, the Architect can use either of these methods:

The other options are not valid methods for triggering Snowpipe:

1: SnowPro Advanced: Architect | Study Guide

2: Snowflake Documentation | Snowpipe Overview

3: Snowflake Documentation | Using the Snowpipe REST API

4: Snowflake Documentation | Loading Data Using Snowpipe and AWS Lambda

5: Snowflake Documentation | Supported File Formats and Compression for Staged Data Files

6: Snowflake Documentation | Using Cloud Notifications to Trigger Snowpipe

7: Snowflake Documentation | Loading Data Using COPY into a Table

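Two of the references above cover the Snowpipe REST API and calling it from AWS Lambda. The following minimal Python sketch builds, but does not send, the `insertFiles` request that a client application or a Lambda function could issue when new files land in S3. Several parts are assumptions or placeholders: the account identifier, the fully qualified pipe name, and the file paths (which are relative to the pipe's stage). The key-pair JWT is passed in ready-made because generating it is out of scope here. Check the headers and body against the Snowpipe REST API documentation before use.

```python
from __future__ import annotations

import json
import urllib.request
import uuid


def build_insert_files_request(account: str, pipe: str, paths: list[str],
                               jwt: str) -> urllib.request.Request:
    """Build a Snowpipe insertFiles POST request (sketch; not sent here).

    account: placeholder account identifier, e.g. "myorg-myaccount"
    pipe:    fully qualified pipe name, e.g. "INGEST_DB.RAW.S3_PIPE"
    paths:   file paths relative to the pipe's stage location
    jwt:     a key-pair JWT produced elsewhere (assumed)
    """
    # A unique requestId per call lets the caller correlate retries.
    url = (f"https://{account}.snowflakecomputing.com/v1/data/pipes/{pipe}"
           f"/insertFiles?requestId={uuid.uuid4()}")
    body = json.dumps({"files": [{"path": p} for p in paths]}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            "Authorization": f"Bearer {jwt}",
        },
    )


if __name__ == "__main__":
    req = build_insert_files_request("myorg-myaccount", "INGEST_DB.RAW.S3_PIPE",
                                     ["2024/01/file1.csv"], "<jwt>")
    print(req.get_method(), req.full_url)
    # urllib.request.urlopen(req) would send it; omitted in this sketch.
```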

A Developer is having a performance issue with a Snowflake query. The query receives up to 10 different values for one parameter, performs an aggregation over the majority of a fact table, and then joins against a smaller dimension table. Different users select the parameter value when they run the query during business hours. Both the fact and dimension tables are loaded with new data in an overnight import process.

On a Small or Medium virtual warehouse, the query performs slowly. Performance is acceptable on a Large or bigger warehouse. However, there is no budget to increase costs. The Developer needs a recommendation that does not increase the compute costs of running this query.

What should the Architect recommend?

Enabling the search optimization service on the table can improve the performance of queries with selective filtering criteria. That appears to be the case here, because each run filters on one parameter value. The service maintains a persistent data structure called a search access path, which lets Snowflake skip micro-partitions that cannot contain matching rows during the scan. This can significantly speed up the query without moving to a larger warehouse. The service itself does, however, add storage costs and serverless compute costs for maintaining the search access path [1].

Reference

* [1] Snowflake Documentation on Search Optimization Service.
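To illustrate how a search access path lets micro-partitions be skipped, here is a toy Python model. It is a simplification for intuition only, not Snowflake's actual internal structure. Each "partition" is a list of parameter values, and the index maps each value to the partitions that contain it, so a filtered query reads only those partitions. All function names are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict


def build_search_access_path(partitions: list[list[str]]) -> dict[str, set[int]]:
    """Toy index: map each value to the ids of the partitions that contain it."""
    index: dict[str, set[int]] = defaultdict(set)
    for pid, values in enumerate(partitions):
        for value in values:
            index[value].add(pid)
    return index


def partitions_to_scan(index: dict[str, set[int]], value: str) -> list[int]:
    """Return only the partitions that may contain `value`; the rest are skipped."""
    return sorted(index.get(value, set()))


def count_matches(partitions: list[list[str]], index: dict[str, set[int]],
                  value: str) -> int:
    # Reads only the candidate partitions instead of the whole table.
    return sum(partitions[pid].count(value) for pid in partitions_to_scan(index, value))


if __name__ == "__main__":
    parts = [["A", "B"], ["B"], ["C"], ["A", "C"]]
    idx = build_search_access_path(parts)
    print(partitions_to_scan(idx, "A"))  # [0, 3]: partitions 1 and 2 are skipped
    print(count_matches(parts, idx, "A"))  # 2
```

The answer matches a full scan while touching fewer partitions. The benefit shrinks as a value appears in more partitions, which is why the service targets selective filters.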

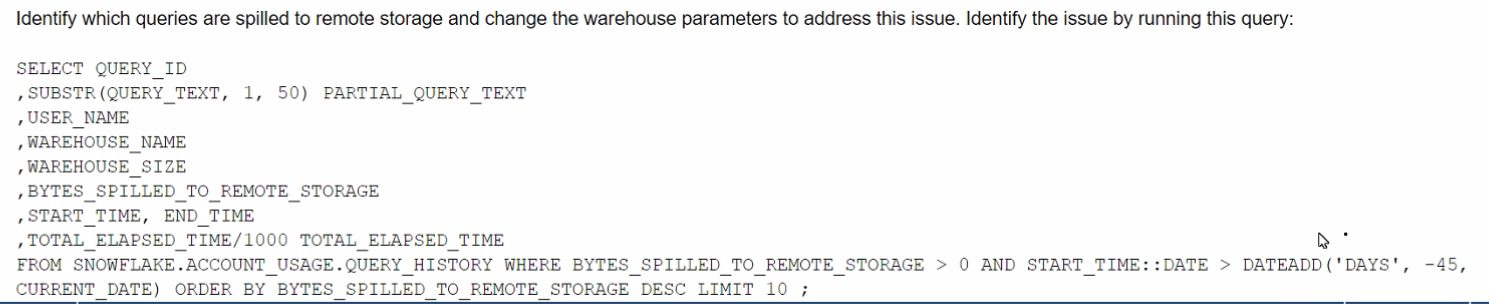

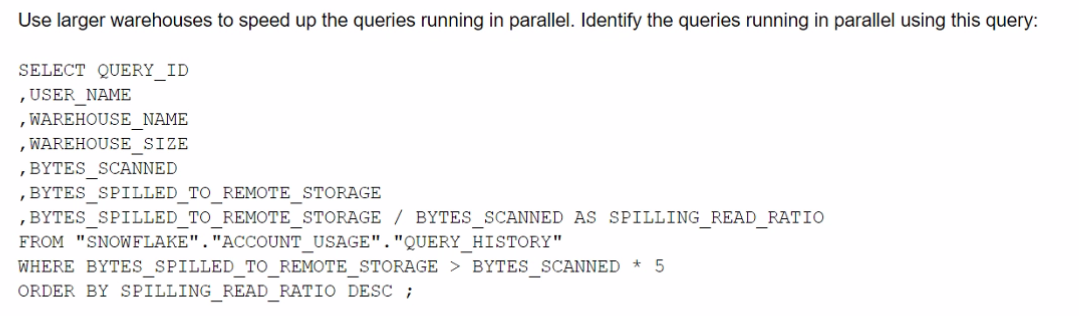

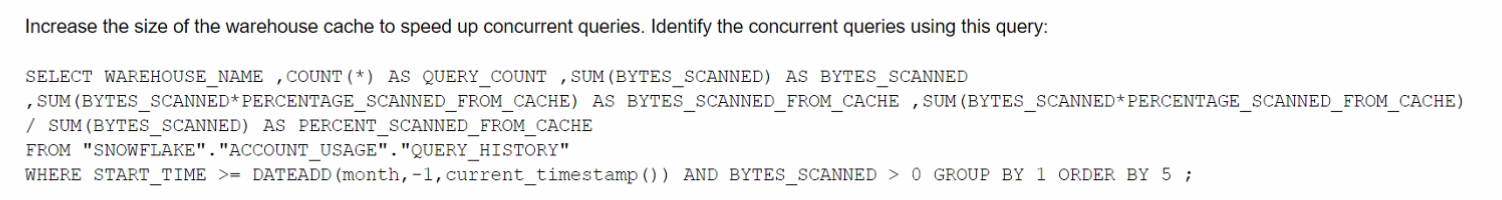

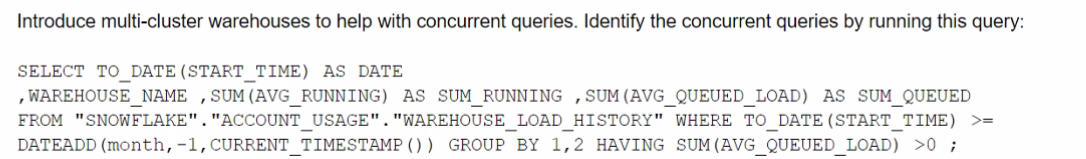

The Business Intelligence team reports that when some team members run queries for their dashboards in parallel with others, the query response time becomes significantly slower. What can a Snowflake Architect do to identify what is occurring and troubleshoot this issue?

A)

B)

C)

D)