At ValidExamDumps, we consistently monitor updates that Salesforce makes to the Salesforce B2C-Commerce-Architect exam questions. Whenever our team identifies changes in the exam questions, exam objectives, exam focus areas, or exam requirements, we immediately update our exam questions for both the PDF and online practice exams. This commitment ensures our customers always have access to the most current and accurate questions. By preparing with these actual questions, our customers can successfully pass the Salesforce Certified B2C Commerce Architect exam on their first attempt without needing additional materials or study guides.

Other providers of certification materials often include questions in their Salesforce B2C-Commerce-Architect material that are outdated or have been removed by Salesforce. These outdated questions lead to customers failing their Salesforce Certified B2C Commerce Architect exam. In contrast, we ensure our question bank includes only precise and up-to-date questions, guaranteeing their presence in your actual exam. Our main priority is your success in the Salesforce B2C-Commerce-Architect exam, not profiting from selling obsolete exam questions in PDF or online practice test form.

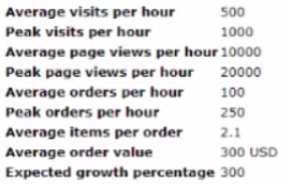

The Client is growing and has decided to migrate its ecommerce website to B2C Commerce. The Client provided the Architect with the following metrics for its existing website over the past 12 months and forecasted into the next year:

Noting these historical metrics and the forecasted growth of 300%, which load test targets meet best practices for testing the new B2C Commerce site?

Considering the existing metrics and forecasted 300% growth, the appropriate load testing targets for the new B2C Commerce site would be:

15000 visits per hour: Applying the forecasted 300% growth to the peak of 1000 visits per hour gives 4000 visits per hour. The 15000 target sits well above that figure, leaving a buffer for unforeseen traffic spikes.

300000 page views per hour: This is scaled up in the same way from the peak of 20000 page views per hour, so the test confirms that the site can handle heavy browsing activity.

3750 orders per hour: This target is based on the peak of 250 orders per hour with the growth applied, and it tests the system's ability to process transactions under significant load.

These targets ensure that the system is robust enough to handle increased traffic and transactions without performance degradation, crucial for maintaining customer satisfaction and operational stability.
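The arithmetic behind these targets can be checked with a short sketch. The peak and target figures are the ones quoted in the explanation above. Reading "300% growth" as the peak plus three times the peak is an assumption, as are the function and variable names.

```python
# Peak figures and load test targets quoted in the explanation.
GROWTH_PERCENT = 300
peaks = {"visits": 1000, "page_views": 20000, "orders": 250}
targets = {"visits": 15000, "page_views": 300000, "orders": 3750}


def apply_growth(peak, growth_percent):
    """Return the peak after growing by growth_percent (assumed additive)."""
    return peak * (100 + growth_percent) // 100


def target_multiple(metric):
    """Return how many times larger the target is than the current peak."""
    return targets[metric] / peaks[metric]


for metric, peak in peaks.items():
    grown = apply_growth(peak, GROWTH_PERCENT)
    headroom = targets[metric] / grown
    print(f"{metric}: grown peak {grown}, target {targets[metric]}, "
          f"{target_multiple(metric):.0f}x current peak, "
          f"{headroom:.2f}x grown peak")
```

Under this reading, every target is the same multiple of its current peak, and the buffer above the grown peak is identical for all three metrics.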

A B2C Commerce developer has implemented a job that connects to an SFTP server, loops through a specific number of .csv files, and generates a generic mapping for every file. In order to keep track of the mappings imported, if a generic mapping is created successfully, a custom object instance is created with the .csv file name. After running the job in the Development instance, the developer checks the Custom Objects in Business Manager and notices there isn't a Custom Object for each .csv file that was on the SFTP server.

What are two possible reasons that some generic mappings were not created? Choose 2 answers

Two plausible reasons for some generic mappings not being created despite the SFTP job running are: A) the system reached its limit for the maximum number of generic mappings allowed, and C) there was an invalid format in one or more of the .csv files processed. When the maximum threshold for mappings is reached, the system cannot create additional mappings, thus stopping any further imports from being registered as custom objects. Additionally, if .csv files are incorrectly formatted, the job would fail to create mappings for those files, leading to the absence of corresponding custom objects in Business Manager. It's crucial to ensure that file formats adhere to expected specifications and that system limits are adequately managed to avoid such issues.
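The two failure modes described above can be illustrated with a small simulation. This is not B2C Commerce job code: the mapping limit, the expected column count, the file names, and the `run_import` helper are all hypothetical and exist only to show how both causes leave some files without a tracking record.

```python
import csv
import io

MAX_MAPPINGS = 2      # hypothetical limit, not the real platform quota
EXPECTED_COLUMNS = 2  # hypothetical file layout: key,value


def is_valid_csv(text):
    """Return True if every row has the expected number of columns."""
    rows = list(csv.reader(io.StringIO(text)))
    return bool(rows) and all(len(row) == EXPECTED_COLUMNS for row in rows)


def run_import(files, max_mappings=MAX_MAPPINGS):
    """Simulate the job: record a file only if its mapping was created."""
    created = []  # stands in for the custom object instances
    skipped = {}  # file name -> reason the mapping was not created
    for name, text in files.items():
        if len(created) >= max_mappings:
            skipped[name] = "mapping limit reached"
        elif not is_valid_csv(text):
            skipped[name] = "invalid format"
        else:
            created.append(name)
    return created, skipped


files = {
    "a.csv": "sku1,valueA\nsku2,valueB\n",
    "b.csv": "sku1;valueA\n",  # wrong delimiter, parsed as one column
    "c.csv": "sku3,valueC\n",
    "d.csv": "sku4,valueD\n",
}
created, skipped = run_import(files)
print(created)
print(skipped)
```

In this run, `b.csv` is skipped for its format and `d.csv` is skipped because the limit was already reached, so only two tracking records exist for four files.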

A B2C Commerce developer has recently completed a tax service LINK cartridge integration into a new SFRA site. During review, the Architect notices the basket calculation hook is being run multiple times during a single tax call.

What is the reason for the duplicate calculations being run?

If multiple hook.js files are referring to the same basket calculation hook within a LINK cartridge integration, it could lead to the hook being executed multiple times during a single tax call. This often occurs due to redundancy in the integration, where multiple scripts are set to trigger the same function, inadvertently causing duplicate calculations. It's essential to ensure that only one script is responsible for invoking specific hooks to prevent this kind of redundancy and inefficiency in the system.
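The effect of duplicate registrations can be sketched with a toy hook registry. This is a simulation, not the platform's hook manager: the `HookRegistry` class and the handler names are hypothetical, and the extension point string is used only as a label. It assumes that every handler registered for an extension point runs when that point is called.

```python
from collections import defaultdict


class HookRegistry:
    """Toy registry: every handler registered for a point is executed."""

    def __init__(self):
        self._hooks = defaultdict(list)

    def register(self, extension_point, handler):
        self._hooks[extension_point].append(handler)

    def call(self, extension_point, *args):
        return [handler(*args) for handler in self._hooks[extension_point]]


calls = []  # records which handlers ran


def base_calculate(basket):
    calls.append("base cartridge")
    return basket


def tax_link_calculate(basket):
    calls.append("tax LINK cartridge")
    return basket


registry = HookRegistry()
# Two cartridges register a handler for the same calculation hook.
registry.register("dw.order.calculate", base_calculate)
registry.register("dw.order.calculate", tax_link_calculate)

registry.call("dw.order.calculate", {"total": 100})
print(calls)
```

A single call to the extension point triggers both handlers, which is the duplicate calculation the Architect observed. Keeping one registration per hook avoids it.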

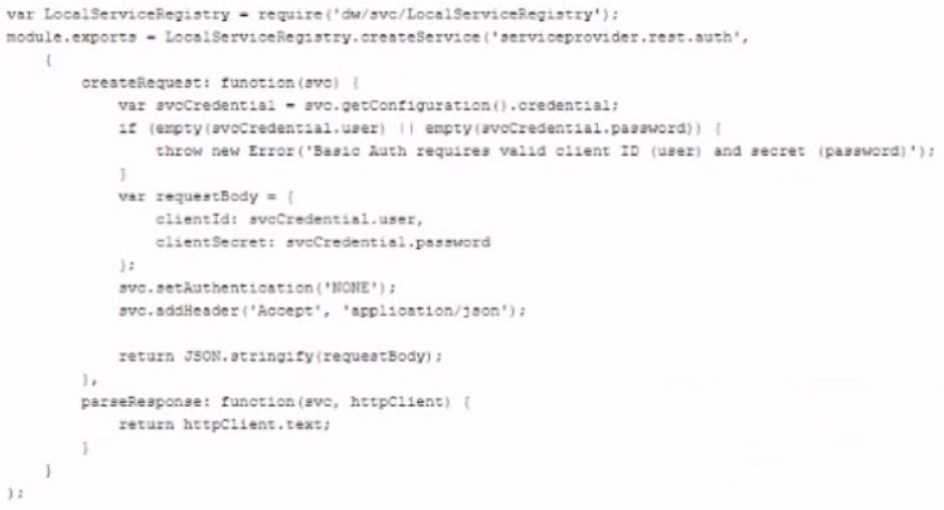

An integration cartridge implements communication between the B2C Commerce Storefront and a third-party service provider. The cartridge contains the LocalServiceRegistry code:

How does this code sample accomplish authentication to the service provider?

The code sample creates a service request to a third-party service provider and explicitly sets the authentication method to 'NONE' with the line svc.setAuthentication('NONE');. This disables Basic Authentication for the call, so no credentials such as a client ID and secret are sent in the standard HTTP authentication header. Instead, the code builds the authentication details into the request body, which indicates that the service expects credentials as part of the payload.
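The difference between the two approaches can be sketched outside the platform with Python's standard library. The requests below are only constructed, never sent. The URL, the `clientId` and `clientSecret` field names, and both helper functions are hypothetical, since the original code sample is not reproduced here.

```python
import base64
import json
import urllib.request

URL = "https://service.example.com/api"  # hypothetical endpoint


def basic_auth_request(user, password, payload):
    """Build a request that carries credentials in the Authorization header."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Basic {token}",
    }
    data = json.dumps(payload).encode()
    return urllib.request.Request(URL, data=data, headers=headers, method="POST")


def body_auth_request(client_id, client_secret, payload):
    """Build a request that carries credentials inside the JSON body."""
    body = dict(payload, clientId=client_id, clientSecret=client_secret)
    headers = {"Content-Type": "application/json"}
    data = json.dumps(body).encode()
    return urllib.request.Request(URL, data=data, headers=headers, method="POST")


basic_req = basic_auth_request("demo-user", "demo-pass", {"orderNo": "1"})
body_req = body_auth_request("demo-id", "demo-secret", {"orderNo": "1"})

print(basic_req.has_header("Authorization"))  # header-based credentials
print(body_req.has_header("Authorization"))   # no authentication header
print(json.loads(body_req.data))              # credentials travel in the body
```

The second request corresponds to the pattern in the explanation: no authentication header is set, and the service reads the credentials from the payload.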

The client provided these business requirements:

* The B2C Commerce platform will integrate with the Client's Order Management System (OMS).

* The OMS supports integration using REST services.

* The OMS is hosted on the Client's infrastructure.

What configurations are needed for this integration with the OMS?

For integrating the B2C Commerce platform with the client's OMS using REST services, the required configurations include:

Service Configuration: This defines the service itself, including its name, its type (HTTP for a REST service), and its mode, and it links the service to a profile and a credential.

Service Profile Configuration: This defines the runtime behavior of the service, such as the connection timeout, circuit breaker, and rate limit settings.

Service Credential Configuration: This holds the OMS endpoint URL together with the user and password used to authenticate requests to the Client's OMS. These values are maintained in Business Manager rather than in cartridge code.

These configurations ensure secure, efficient, and reliable communication between the B2C Commerce platform and the client's OMS, adhering to best practices in web service integration.
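How the three configurations relate to each other can be modeled in a few lines. This is a plain data sketch, not a platform API: the class names, field names, IDs, URL, and values are hypothetical, and it assumes the endpoint URL is stored with the credential.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceCredential:
    credential_id: str
    url: str       # OMS endpoint on the Client's infrastructure
    user: str
    password: str


@dataclass(frozen=True)
class ServiceProfile:
    profile_id: str
    timeout_ms: int
    circuit_breaker_enabled: bool
    rate_limit_enabled: bool


@dataclass(frozen=True)
class ServiceConfig:
    service_id: str
    service_type: str  # "HTTP" for a REST integration
    profile: ServiceProfile
    credential: ServiceCredential


def describe(service):
    """Summarize which profile and credential a service relies on."""
    return (f"{service.service_id} ({service.service_type}) uses profile "
            f"{service.profile.profile_id} and credential "
            f"{service.credential.credential_id}")


oms_service = ServiceConfig(
    service_id="oms.rest.orders",
    service_type="HTTP",
    profile=ServiceProfile("oms.profile", 5000, True, False),
    credential=ServiceCredential(
        "oms.credential", "https://oms.example.com/api", "svc-user", "secret"
    ),
)
print(describe(oms_service))
```

The point of the model is that a service is incomplete without both a profile and a credential, which is why all three configurations are required.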