At ValidExamDumps, we consistently monitor Microsoft's updates to the DP-600 exam questions. Whenever our team identifies changes in the exam questions, exam objectives, exam focus areas, or exam requirements, we immediately update our exam questions for both the PDF and the online practice exams. This commitment ensures our customers always have access to the most current and accurate questions. By preparing with these actual questions, our customers can pass the Microsoft Implementing Analytics Solutions Using Microsoft Fabric exam on their first attempt without needing additional materials or study guides.

Other certification material providers often include questions that are outdated or that Microsoft has removed from the DP-600 exam. These outdated questions lead to customers failing their Microsoft Implementing Analytics Solutions Using Microsoft Fabric exam. In contrast, we ensure our question bank includes only precise and up-to-date questions, guaranteeing their presence in your actual exam. Our main priority is your success in the Microsoft DP-600 exam, not profiting from selling obsolete exam questions in PDF or online practice test form.

You have an Amazon Web Services (AWS) subscription that contains an Amazon Simple Storage Service (Amazon S3) bucket named bucket1.

You have a Fabric tenant that contains a lakehouse named LH1.

In LH1, you plan to create a OneLake shortcut to bucket1.

You need to configure authentication for the connection.

Which two values should you provide? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

What should you use to implement calculation groups for the Research division semantic models?

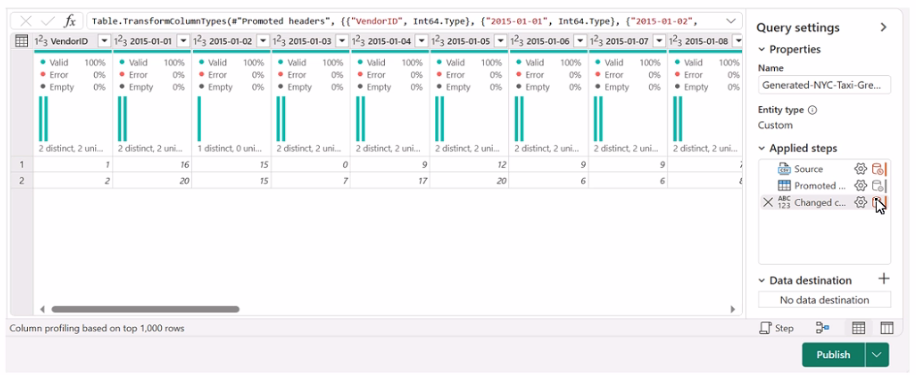

You have a Fabric workspace named Workspace1 that contains a dataflow named Dataflow1. Dataflow1 contains a query that returns the data shown in the following exhibit.

You need to transform the date columns into attribute-value pairs, where columns become rows.

You select the VendorID column.

Which transformation should you select from the context menu of the VendorID column?

Because only the VendorID column is selected, the transformation to choose from its context menu is Unpivot other columns. Unpivot columns acts on the selected columns, so it would unpivot VendorID itself, whereas Unpivot other columns keeps VendorID in place and turns every remaining column (here, the date columns) into rows. The result has two new columns: one for the attribute (the original column names) and one for the value (the data from the cells). Reference: unpivoting techniques are covered in the Power Query documentation, which explains how to use these transformations in data modeling.
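To make the attribute-value reshaping concrete, here is a minimal sketch in plain Python of what unpivoting does to a table. It is not Power Query code: the `unpivot` helper, its parameter names, and the sample rows are all hypothetical, and the exhibit's real data is not reproduced.

```python
def unpivot(rows, id_columns, attribute_name="Attribute", value_name="Value"):
    """Turn every column not listed in id_columns into (attribute, value) rows."""
    result = []
    for row in rows:
        # Columns that stay fixed on every output row.
        fixed = {col: row[col] for col in id_columns}
        for col, value in row.items():
            if col in id_columns:
                continue
            # The original column name becomes the attribute,
            # and the cell content becomes the value.
            result.append({**fixed, attribute_name: col, value_name: value})
    return result


# Hypothetical sample data standing in for the exhibit.
sample = [
    {"VendorID": 1, "2023-01-01": 100, "2023-01-02": 150},
    {"VendorID": 2, "2023-01-01": 200, "2023-01-02": 250},
]

unpivoted = unpivot(sample, id_columns=["VendorID"])
for record in unpivoted:
    print(record)
```

Each input row with two date columns yields two output rows, so the wide table becomes a long one keyed by VendorID.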

You have a Fabric tenant that contains a lakehouse named Lakehouse1. Lakehouse1 contains a Delta table named Customer.

When you query Customer, you discover that the query is slow to execute. You suspect that maintenance was NOT performed on the table.

You need to identify whether maintenance tasks were performed on Customer.

Solution: You run the following Spark SQL statement:

REFRESH TABLE customer

Does this meet the goal?

No. The REFRESH TABLE statement does not provide information on whether maintenance tasks were performed. It only refreshes the table's cached metadata to reflect any changes to the underlying data files. Reference: the use and effects of the REFRESH TABLE command are explained in the Spark SQL documentation.
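By contrast, a Delta table's commit history does record maintenance operations, and it can be listed with `DESCRIBE HISTORY`. The sketch below filters history rows for such entries. It rests on assumptions: the operation names in `MAINTENANCE_OPERATIONS` are taken to match the `operation` column of the history output, the `maintenance_entries` helper is hypothetical, and the sample rows are made up so the block runs without a Spark session.

```python
# Operation names assumed to mark maintenance in the Delta history
# "operation" column (OPTIMIZE for compaction, VACUUM for file cleanup).
MAINTENANCE_OPERATIONS = {"OPTIMIZE", "VACUUM START", "VACUUM END"}


def maintenance_entries(history_rows):
    """Return only the history rows whose operation is a maintenance task."""
    return [
        row for row in history_rows
        if row.get("operation") in MAINTENANCE_OPERATIONS
    ]


# In a Fabric notebook the rows could be obtained along these lines:
#   history_rows = [r.asDict() for r in
#                   spark.sql("DESCRIBE HISTORY customer").collect()]
# Hypothetical rows are used here instead.
sample_history = [
    {"version": 0, "operation": "CREATE TABLE AS SELECT"},
    {"version": 1, "operation": "WRITE"},
    {"version": 2, "operation": "OPTIMIZE"},
    {"version": 3, "operation": "WRITE"},
]

found = maintenance_entries(sample_history)
if found:
    print("Maintenance found at versions:", [row["version"] for row in found])
else:
    print("No maintenance operations recorded in the history.")
```

An empty result would support the suspicion that the table was never optimized or vacuumed.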

What should you recommend using to ingest the customer data into the data store in the AnalyticsPOC workspace?

To ingest the customer data into the data store in the AnalyticsPOC workspace, recommend a dataflow (D). Dataflows are built in the Power BI service to ingest, cleanse, transform, and load data into the Power BI environment. They provide the low-code ingestion and transformation that Litware's technical requirements call for. Reference: dataflows and their use in Power BI environments are described in Microsoft's Power BI documentation.