At ValidExamDumps, we consistently monitor the updates Databricks makes to the Databricks-Machine-Learning-Associate exam questions. Whenever our team identifies changes in the exam questions, exam objectives, exam focus areas, or exam requirements, we immediately update our exam questions for both the PDF and online practice exams. This commitment ensures our customers always have access to the most current and accurate questions. By preparing with these questions, our customers can pass the Databricks Certified Machine Learning Associate exam on their first attempt without needing additional materials or study guides.

In which of the following situations is it preferable to impute missing feature values with their median value over the mean value?

Imputing missing values with the median is often preferred over the mean in scenarios where the data contains a lot of extreme outliers. The median is a more robust measure of central tendency in such cases, as it is not as heavily influenced by outliers as the mean. Using the median ensures that the imputed values are more representative of the typical data point, thus preserving the integrity of the dataset's distribution. The other options are not specifically relevant to the question of handling outliers in numerical data. Reference:

Data Imputation Techniques (Dealing with Outliers).
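The robustness argument above can be checked numerically. The following is a minimal sketch using only the Python standard library; the `incomes` column and its values are hypothetical, chosen so that a single extreme outlier sits among otherwise typical values.

```python
from statistics import mean, median

# Hypothetical feature column: mostly typical values, one extreme outlier,
# and two missing entries represented as None.
incomes = [42_000, 45_000, None, 47_000, 51_000, None, 2_500_000]

# Both statistics are computed from the observed (non-missing) values only.
observed = [v for v in incomes if v is not None]

mean_fill = mean(observed)      # pulled far upward by the single outlier
median_fill = median(observed)  # stays with the bulk of the data

imputed_with_mean = [mean_fill if v is None else v for v in incomes]
imputed_with_median = [median_fill if v is None else v for v in incomes]

print(f"mean fill:   {mean_fill:,.0f}")
print(f"median fill: {median_fill:,.0f}")
```

With these values the mean is 537,000 while the median is 47,000, so mean imputation would give the two missing rows a value more than ten times larger than any typical observation, whereas the median fill looks like an ordinary data point.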

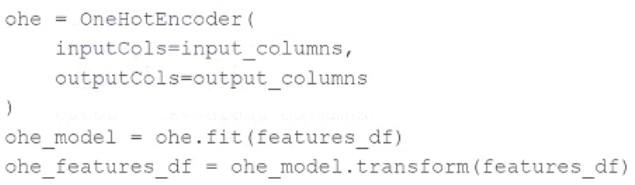

A data scientist wants to use Spark ML to one-hot encode the categorical features in their PySpark DataFrame features_df. A list of the names of the string columns is assigned to the input_columns variable.

They have developed this code block to accomplish this task:

The code block is returning an error.

Which of the following adjustments does the data scientist need to make to accomplish this task?

The OneHotEncoder in Spark ML requires numerical indices as inputs rather than string labels. Therefore, you need to first convert the string columns to numerical indices using StringIndexer. After that, you can apply OneHotEncoder to these indices.

Corrected code:

    from pyspark.ml import Pipeline
    from pyspark.ml.feature import StringIndexer, OneHotEncoder

    # Names for the intermediate index columns
    index_columns = [c + '_index' for c in input_columns]
    # Assumed naming for the encoded columns; replace with your own output_columns
    output_columns = [c + '_ohe' for c in input_columns]

    # Convert each string column to a numeric index column
    indexers = [StringIndexer(inputCol=c, outputCol=c + '_index') for c in input_columns]
    indexer_model = Pipeline(stages=indexers).fit(features_df)
    indexed_features_df = indexer_model.transform(features_df)

    # One-hot encode the indexed columns
    ohe = OneHotEncoder(inputCols=index_columns, outputCols=output_columns)
    ohe_model = ohe.fit(indexed_features_df)
    ohe_features_df = ohe_model.transform(indexed_features_df)
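To see why the indexing step has to come first, here is a plain-Python sketch of the index-then-encode idea. It is an illustration only, not Spark's implementation: the helper names `fit_string_index` and `one_hot` and the `colors` data are hypothetical, and the sketch keeps every category as a dense list, whereas Spark's OneHotEncoder returns sparse vectors and drops the last category by default (`dropLast=True`).

```python
from collections import Counter

def fit_string_index(values):
    """Map each label to an integer index, most frequent label first."""
    counts = Counter(values)
    # Sort by descending frequency, then alphabetically to break ties.
    ordered = sorted(counts, key=lambda label: (-counts[label], label))
    return {label: idx for idx, label in enumerate(ordered)}

def one_hot(index, size):
    """Return a list of length `size` with a 1 at position `index`."""
    return [1 if i == index else 0 for i in range(size)]

# Hypothetical categorical column.
colors = ["red", "blue", "red", "green", "red", "blue"]

# Step 1: strings -> integer indices (the role StringIndexer plays).
label_to_index = fit_string_index(colors)

# Step 2: integer indices -> indicator vectors (the role OneHotEncoder plays).
encoded = [one_hot(label_to_index[c], len(label_to_index)) for c in colors]

print(label_to_index)
print(encoded[0], encoded[3])
```

The encoder only needs to know a position and a vector length, which is why it operates on indices and cannot consume the raw strings directly.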

A data scientist has been given an incomplete notebook from the data engineering team. The notebook uses a Spark DataFrame spark_df on which the data scientist needs to perform further feature engineering. Unfortunately, the data scientist has not yet learned the PySpark DataFrame API.

Which of the following blocks of code can the data scientist run to be able to use the pandas API on Spark?

To use the pandas API on Spark, which is designed to bridge the gap between the simplicity of pandas and the scalability of Spark, import the pyspark.pandas module (conventionally as ps) and convert the Spark DataFrame to a pandas-on-Spark DataFrame, for example with ps.DataFrame(spark_df). The pandas API on Spark was formerly the separate Koalas project and has shipped with PySpark as pyspark.pandas since Spark 3.2. The resulting DataFrame lets the data scientist work with a familiar pandas-like API while the data stays distributed and managed by Spark.

A data scientist has replaced missing values in their feature set with each respective feature variable's median value. A colleague suggests that the data scientist is throwing away valuable information by doing this.

Which of the following approaches can they take to include as much information as possible in the feature set?

By creating a binary feature variable for each feature with missing values to indicate whether a value has been imputed, the data scientist can preserve information about the original state of the data. This approach maintains the integrity of the dataset by marking which values are original and which are synthetic (imputed). Here are the steps to implement this approach:

Identify Missing Values: Determine which features contain missing values.

Impute Missing Values: Continue with median imputation or choose another method (mean, mode, regression, etc.) to fill missing values.

Create Indicator Variables: For each feature that had missing values, add a new binary feature. This feature should be '1' if the original value was missing and imputed, and '0' otherwise.

Data Integration: Integrate these new binary features into the existing dataset. This maintains a record of where data imputation occurred, allowing models to potentially weight these observations differently.

Model Adjustment: Adjust machine learning models to account for these new features, which might involve considering interactions between these binary indicators and other features.
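The first four steps above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not a production implementation: the `rows` data, the `impute_with_indicators` helper, and the `_was_missing` suffix are all hypothetical names chosen for the example.

```python
from statistics import median

# Hypothetical rows; None marks a missing value.
rows = [
    {"age": 34, "income": 52_000},
    {"age": None, "income": 48_000},
    {"age": 29, "income": None},
    {"age": 41, "income": 61_000},
]

def impute_with_indicators(rows):
    """Median-impute each feature and add a <feature>_was_missing flag."""
    features = list(rows[0].keys())
    # Identify the features that actually contain missing values.
    with_missing = [f for f in features if any(r[f] is None for r in rows)]
    # Compute each median from the observed values only.
    medians = {
        f: median(r[f] for r in rows if r[f] is not None)
        for f in with_missing
    }
    result = []
    for r in rows:
        new_row = dict(r)  # copy, so the input rows are left untouched
        for f in with_missing:
            missing = r[f] is None
            # Record the flag before the gap is filled.
            new_row[f"{f}_was_missing"] = int(missing)
            if missing:
                new_row[f] = medians[f]
        result.append(new_row)
    return result

imputed = impute_with_indicators(rows)
for row in imputed:
    print(row)
```

In scikit-learn, the same effect is available through `SimpleImputer(strategy="median", add_indicator=True)`, which appends the indicator columns to the imputed output.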

Reference

'Feature Engineering for Machine Learning' by Alice Zheng and Amanda Casari (O'Reilly Media, 2018), especially the sections on handling missing data.

Scikit-learn documentation on imputing missing values: https://scikit-learn.org/stable/modules/impute.html

Which of the following describes the relationship between native Spark DataFrames and pandas API on Spark DataFrames?

Pandas API on Spark (previously known as Koalas) provides a pandas-like API on top of Apache Spark. It allows users to perform pandas operations on large datasets using Spark's distributed compute capabilities. Internally, it uses Spark DataFrames and adds metadata that facilitates handling operations in a pandas-like manner, ensuring compatibility and leveraging Spark's performance and scalability.
