At ValidExamDumps, we consistently monitor the updates Databricks makes to the Databricks-Certified-Data-Engineer-Associate exam questions. Whenever our team identifies changes in the exam questions, exam objectives, exam focus areas, or exam requirements, we immediately update our exam questions for both the PDF and online practice exams. This commitment ensures our customers always have access to the most current and accurate questions. By preparing with these actual questions, our customers can successfully pass the Databricks Certified Data Engineer Associate exam on their first attempt without needing additional materials or study guides.

Other certification material providers often include questions that Databricks has retired or removed in their Databricks-Certified-Data-Engineer-Associate exam materials. These outdated questions lead to customers failing the Databricks Certified Data Engineer Associate exam. In contrast, we ensure our question bank includes only precise and up-to-date questions, guaranteeing their presence in your actual exam. Our main priority is your success in the Databricks-Certified-Data-Engineer-Associate exam, not profiting from selling obsolete exam questions as a PDF or online practice test.

A data engineering team has two tables. The first table march_transactions is a collection of all retail transactions in the month of March. The second table april_transactions is a collection of all retail transactions in the month of April. There are no duplicate records between the tables.

Which of the following commands should be run to create a new table all_transactions that contains all records from march_transactions and april_transactions without duplicate records?

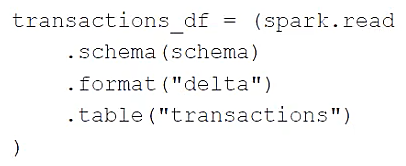
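The answer options are only available in the image, but the pattern this question tests is usually a `CREATE TABLE ... AS SELECT` that combines both tables with `UNION`, which drops duplicate rows (`UNION ALL` would keep them). The following is a minimal sketch of that pattern, assuming Python's built-in `sqlite3` as a local stand-in for Databricks SQL; the `id` and `amount` columns and the sample rows are hypothetical.

```python
import sqlite3


def build_all_transactions(conn):
    """Create all_transactions from both monthly tables and return its row count."""
    # UNION (without ALL) removes duplicate rows across the two inputs.
    conn.execute(
        """
        CREATE TABLE all_transactions AS
        SELECT * FROM march_transactions
        UNION
        SELECT * FROM april_transactions
        """
    )
    return conn.execute("SELECT COUNT(*) FROM all_transactions").fetchone()[0]


# Hypothetical sample data; the real tables would already exist in the metastore.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE march_transactions (id INTEGER, amount REAL)")
conn.execute("CREATE TABLE april_transactions (id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO march_transactions VALUES (?, ?)", [(1, 10.0), (2, 20.0)]
)
conn.executemany(
    "INSERT INTO april_transactions VALUES (?, ?)", [(3, 30.0), (4, 40.0)]
)

row_count = build_all_transactions(conn)
```

Because the two source tables share no records, the combined table here holds every row from both months.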

A data engineer is using the following code block as part of a batch ingestion pipeline to read from a composable table:

Which of the following changes needs to be made so this code block will work when the transactions table is a stream source?
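The code block the question refers to is not reproduced on this page. As a rough illustration of the difference between a batch read and a streaming read in PySpark, the sketch below assumes an existing `SparkSession` passed in as `spark` and a table named `transactions`; the function names are hypothetical.

```python
def read_transactions_batch(spark, table_name="transactions"):
    """Batch read: spark.read returns a DataFrameReader."""
    return spark.read.table(table_name)


def read_transactions_stream(spark, table_name="transactions"):
    """Streaming read: spark.readStream returns a DataStreamReader.

    The only change from the batch version is read -> readStream.
    """
    return spark.readStream.table(table_name)


# Usage inside a Databricks notebook, where `spark` is already defined:
#   streaming_df = read_transactions_stream(spark)
```

The resulting streaming DataFrame is then written with `writeStream` rather than `write`.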

A data engineer has developed a data pipeline to ingest data from a JSON source using Auto Loader, but the engineer has not provided any type inference or schema hints in their pipeline. Upon reviewing the data, the data engineer has noticed that all of the columns in the target table are of the string type despite some of the fields only including float or boolean values.

Which of the following describes why Auto Loader inferred all of the columns to be of the string type?
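For context, JSON does not encode column data types, so Auto Loader's schema inference treats every column as a string by default unless type inference is switched on. The sketch below shows how that option might be set, assuming an existing `SparkSession` passed in as `spark`; the helper names and the paths supplied by the caller are hypothetical, while the `cloudFiles.*` keys are Auto Loader option names.

```python
def auto_loader_options(schema_location, infer_types=True):
    """Build Auto Loader options for a JSON source."""
    return {
        "cloudFiles.format": "json",
        # Location where Auto Loader tracks the inferred schema.
        "cloudFiles.schemaLocation": schema_location,
        # Without this option, JSON columns are inferred as strings.
        "cloudFiles.inferColumnTypes": str(infer_types).lower(),
    }


def read_json_stream(spark, source_path, schema_location):
    """Return a streaming DataFrame that reads JSON files with Auto Loader."""
    reader = spark.readStream.format("cloudFiles")
    for key, value in auto_loader_options(schema_location).items():
        reader = reader.option(key, value)
    return reader.load(source_path)
```

Schema hints (`cloudFiles.schemaHints`) are another way to pin the types of specific columns when full inference is not wanted.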

An engineering manager uses a Databricks SQL query to monitor ingestion latency for each data source. The manager checks the results of the query every day, but they are manually rerunning the query each day and waiting for the results.

Which of the following approaches can the manager use to ensure the results of the query are updated each day?

Databricks SQL lets users schedule queries to run automatically at a specified frequency and time zone, which keeps dashboards and alerts updated with the latest data. To schedule a query:

1. In the Query Editor, click Schedule > Add schedule to open a menu with schedule settings.
2. Choose when to run the query. Use the dropdown pickers to specify the frequency, period, starting time, and time zone. Optionally, select the Show cron syntax checkbox to edit the schedule in Quartz Cron Syntax.
3. Choose More options to show optional settings. Here you can also choose a name for the schedule and a SQL warehouse to run the query.
4. Click Create. The query will then run automatically according to the schedule.
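For readers who prefer the cron option, a Quartz cron expression has the fields seconds, minutes, hours, day of month, month, and day of week. The helper below is a small sketch that builds a once-a-day expression; the function name is hypothetical and the expression would be pasted into the schedule dialog by hand.

```python
def daily_quartz_cron(hour, minute=0):
    """Return a Quartz cron expression that fires once a day at hour:minute."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    if not 0 <= minute <= 59:
        raise ValueError("minute must be between 0 and 59")
    # Fields: seconds minutes hours day-of-month month day-of-week
    # '?' means "no specific value" for day-of-week, as Quartz requires
    # when day-of-month is set to '*'.
    return f"0 {minute} {hour} * * ?"


six_am_daily = daily_quartz_cron(6)
```

The time zone is chosen separately in the schedule settings, so the expression itself carries no zone information.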

A data engineer wants to schedule their Databricks SQL dashboard to refresh every hour, but they only want the associated SQL endpoint to be running when it is necessary. The dashboard has multiple queries on multiple datasets associated with it. The data that feeds the dashboard is automatically processed using a Databricks Job.

Which approach can the data engineer use to minimize the total running time of the SQL endpoint used in the refresh schedule of their dashboard?

To minimize the total running time of the SQL endpoint used in a dashboard's refresh schedule, the most effective approach is to use the Auto Stop feature. Auto Stop shuts the SQL endpoint down automatically after a period of inactivity, so it runs only when needed, such as during a dashboard refresh or while it is being actively queried. This reduces resource usage and cost because the endpoint does not sit idle between refreshes.

Reference: Databricks documentation on SQL endpoints.
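Auto Stop is normally set in the SQL endpoint's settings in the UI. As a hedged illustration only, the sketch below builds a settings payload of the kind the Databricks SQL Warehouses REST API accepts, to the best of my understanding; the field names `name`, `cluster_size`, and `auto_stop_mins` should be checked against the current API documentation, and the helper name and example values are hypothetical.

```python
import json


def warehouse_settings(name, auto_stop_mins, cluster_size="Small"):
    """Build a settings payload with an inactivity timeout in minutes."""
    if auto_stop_mins < 0:
        raise ValueError("auto_stop_mins must not be negative")
    return {
        "name": name,
        "cluster_size": cluster_size,
        # Minutes of inactivity before the endpoint stops itself.
        "auto_stop_mins": auto_stop_mins,
    }


# Hypothetical endpoint that stops after 10 idle minutes.
payload = json.dumps(warehouse_settings("dashboard-refresh", 10), indent=2)
```

A short timeout keeps the endpoint from idling through most of the hour between scheduled dashboard refreshes.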